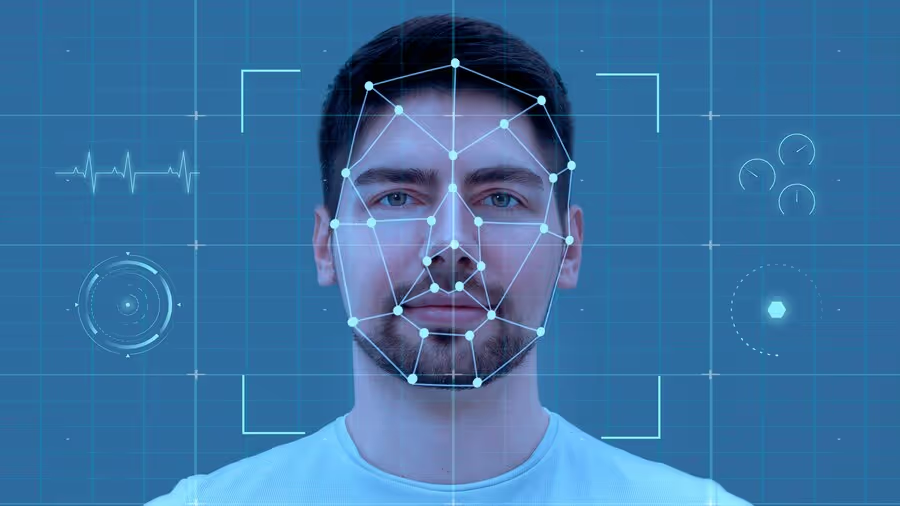

Facial emotion recognition software has made a big leap in healthcare, especially in areas like patient monitoring, mental health, and remote diagnostics. By analyzing subtle facial cues and matching them to emotional profiles, this technology helps providers recognize how a patient might be feeling without relying only on words. But it's not perfect. Sometimes it gets emotions wrong, and when that happens in a healthcare setting, it can create confusion or even lead to poor communication between patients and medical staff.

Misreads can happen for a number of reasons, and they’re not always obvious. You might assume the software is broken or that the camera isn't working right, but the problem usually comes down to how the system was trained and what's happening in the environment. Facial expressions that were poorly represented in the training data, room lighting, and camera angles can all lead the software to make the wrong call.

Misinterpretation Due to Diverse Facial Expressions

One major reason facial emotion recognition software makes incorrect judgments is that human expressions aren’t universal. Smiles, frowns, and even expressions of surprise vary widely across cultures and individuals. An expression that signals discomfort in one community might look like polite interest in another. When AI models aren’t trained on a broad enough range of facial examples, they miss these important differences.

Most emotion recognition tools rely on data sets filled with standard images of faces showing basic emotions like happy, sad, angry, or fearful. But life rarely presents such clearly defined feelings. Instead, people show mixed emotions, subtle looks, or expressions unique to their personality or culture. If those nuances weren’t part of the training, the software isn’t likely to understand them.

This is especially tricky in healthcare, where reading emotions accurately matters a lot. A misread might cause a clinician to think a patient is disengaged when they’re simply processing information quietly. Trained staff can often pick up on subtle cues that software misses entirely. When the software misinterprets those cues, the result can be a breakdown in communication or, worse, care decisions based on the wrong emotional read.

Here are a few common issues related to diversity:

1. Limited representation of ethnic and cultural facial expressions in training data

2. Inconsistent definitions of emotions across age groups or lifestyles

3. Over-reliance on standardized emotion images that don’t match real-world responses

4. Gender differences in expression that affect how the software interprets emotion

Bias in data isn’t always intentional, but its effects are real. Software developers need to push for more inclusive data sets that reflect actual users if we’re going to improve how these systems perform across all patient groups.
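One practical way to check whether these issues affect a particular system is to measure accuracy separately for each patient group instead of relying on a single overall score. The sketch below is a minimal illustration, assuming you have a labeled test set where each example records a group tag, the true emotion, and the software's prediction. The function name `accuracy_by_group` and the group labels are hypothetical, not part of any specific product.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return the share of correct predictions for each group.

    records: iterable of (group, true_emotion, predicted_emotion) tuples.
    The group tags are hypothetical; use whatever categories your test set records.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, true_emotion, predicted in records:
        total[group] += 1
        if predicted == true_emotion:
            correct[group] += 1
    # Every group in `total` has at least one record, so there is no division by zero.
    return {group: correct[group] / total[group] for group in total}


test_set = [
    ("group A", "happy", "happy"),
    ("group A", "sad", "sad"),
    ("group B", "neutral", "angry"),  # a neutral face misread as angry
    ("group B", "happy", "happy"),
]
print(accuracy_by_group(test_set))  # {'group A': 1.0, 'group B': 0.5}
```

A large gap between groups suggests the training data may not cover some patients well. Emotion labels can themselves be subjective, so a check like this is only as reliable as the test set behind it.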

Environmental Factors Affecting Accuracy

Another part of the problem lies in the space where facial recognition happens. These systems are sensitive to their surroundings, and even small changes in lighting, shadows, or camera position can throw off the results. You could have the clearest camera and the most advanced software, but if the lighting in the room isn’t ideal, your results may still be off.

Here are some everyday scenarios that can mess with accuracy:

1. Overhead lighting creating shadows that distort expressions

2. Dim rooms where facial features aren’t well-defined

3. Bright backlighting that causes glare or washes out details

4. Cameras placed at awkward angles, like too low or too high

5. Reflections from glasses or screens that change how the software reads facial points

For example, imagine trying to analyze a patient over a video call in a dimly lit room. Even if the patient is looking directly into the camera, their expression might be partially hidden, causing the software to read frustration or worry as a neutral mood.

These systems need clear and consistent visuals to pick up on subtle emotional signs. When the environment doesn’t provide that, it’s like asking someone to read lips in a room full of fog. Making small adjustments to camera placement or lighting can help, but the tech still has to handle less-than-ideal conditions. Otherwise, the promise of emotion recognition in healthcare remains limited to perfect settings, not real ones.
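Some of these conditions can be caught before any emotion is analyzed. As a minimal sketch, assuming each video frame is available as a grid of grayscale brightness values from 0 to 255, a simple pre-check can flag frames that are too dim or washed out. The function `check_lighting` and all of its threshold values are hypothetical placeholders that would need tuning for a real camera setup, and this check does not catch shadows, reflections from glasses, or awkward camera angles.

```python
# Hypothetical thresholds for illustration only; real values depend on the camera.
DIM_MEAN = 60             # average brightness below this counts as too dim
CLIP_LEVEL = 250          # pixels at or above this are treated as washed out
MAX_CLIPPED_SHARE = 0.2   # tolerated share of washed-out pixels


def check_lighting(frame):
    """Return lighting warnings for one grayscale frame (rows of 0-255 values)."""
    pixels = [p for row in frame for p in row]
    if not pixels:
        return ["empty frame"]
    mean = sum(pixels) / len(pixels)
    clipped_share = sum(1 for p in pixels if p >= CLIP_LEVEL) / len(pixels)
    warnings = []
    if mean < DIM_MEAN:
        warnings.append(f"too dim (mean brightness {mean:.0f})")
    if clipped_share > MAX_CLIPPED_SHARE:
        warnings.append(f"washed out ({clipped_share:.0%} of pixels near white)")
    return warnings


print(check_lighting([[30, 40], [30, 40]]))      # ['too dim (mean brightness 35)']
print(check_lighting([[255, 255], [100, 120]]))  # ['washed out (50% of pixels near white)']
print(check_lighting([[120, 130], [140, 150]]))  # []
```

Flagging a poor frame and asking the patient to adjust their lighting is usually cheaper than trying to interpret an expression the camera never captured clearly.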

Technological Limitations and Data Bias

A significant issue for facial emotion recognition software is the combination of technical limitations and data bias. When these systems rely heavily on small data sets, they don't capture the full spectrum of human emotion. Many AI programs are trained on a fixed collection of images that often fails to account for different skin tones, facial structures, or expressions that fall outside the norm.

Bias can creep in when the training data isn't representative. For instance, if the software's training data lacks diversity in age, ethnicity, or gender, its ability to interpret emotions accurately across all groups is compromised. The result is skewed analysis, where the software might read a neutral expression as angry simply because it falls outside the range of what it was trained on.

To improve accuracy, it's important to expand the data sets to include a broader variety of human faces and expressions. Making sure the training data reflects real-world diversity can help close this gap. Regularly updating and retraining the underlying models also helps. Without such updates, the software risks falling behind in accurately reading the emotions of people from different backgrounds.
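Before expanding a data set, it helps to know which groups are thin. A minimal sketch, assuming each training image carries a demographic tag (many data sets don't record this at all), is to compute each expected group's share of the images and flag any that fall below a chosen minimum. The function name, the age bands, and the 10% cutoff are illustrative assumptions, not an industry standard.

```python
from collections import Counter


def underrepresented_groups(labels, expected_groups, min_share=0.10):
    """Return expected groups whose share of the training set is below min_share.

    labels: one demographic tag per training image (hypothetical metadata).
    expected_groups: every group the system should serve, so that groups
    with zero images are flagged too.
    """
    counts = Counter(labels)  # groups absent from labels count as 0
    total = len(labels)
    flagged = []
    for group in expected_groups:
        share = counts[group] / total if total else 0.0
        if share < min_share:
            flagged.append(group)
    return flagged


labels = ["18-39"] * 70 + ["40-64"] * 25 + ["65+"] * 5
print(underrepresented_groups(labels, ["under 18", "18-39", "40-64", "65+"]))
# ['under 18', '65+']
```

Counting is only a first step. A group can make up a healthy share of the images and still be labeled inconsistently, so representation checks work best alongside accuracy testing on each group.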

Emotional Nuances and Context

Our emotions don't exist in a vacuum. They're influenced by countless factors, including context, situation, and individual personality. Facial recognition software, however, often overlooks these nuances. It assesses expressions as though they exist in isolation, detached from context or environment. Yet a smile can indicate happiness, sarcasm, or even nervousness depending on the situation.

Recognizing these emotional subtleties requires more than analyzing facial expressions alone. For example, a patient might furrow their brow while listening to medical advice, which could look like concern. But if they are also nodding, it may simply mean they are concentrating on understanding what they're hearing.

This adds a layer of complexity. Facial recognition must adapt to recognize patterns and connections between expressions and context. This could involve coupling face reading with other data streams, such as speech tone or physiological indicators, to provide a fuller picture of emotional state. Without incorporating context, software remains prone to errors, unable to make the accurate interpretations needed in delicate settings like healthcare.
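One common way to combine data streams is late fusion: each source produces its own per-emotion scores, and those scores are blended with a weighted average. The sketch below assumes a face model and a voice-tone model each return probabilities that sum to 1. The function names and the equal default weighting are hypothetical choices for illustration, not how any particular product works.

```python
def fuse_scores(face_probs, voice_probs, face_weight=0.5):
    """Blend two per-emotion probability dicts with a weighted average.

    Assumes each input maps emotion -> probability and sums to 1, so the
    blended scores also sum to 1. face_weight is a hypothetical tuning knob.
    """
    if not 0.0 <= face_weight <= 1.0:
        raise ValueError("face_weight must be between 0 and 1")
    voice_weight = 1.0 - face_weight
    emotions = set(face_probs) | set(voice_probs)
    return {
        emotion: face_weight * face_probs.get(emotion, 0.0)
        + voice_weight * voice_probs.get(emotion, 0.0)
        for emotion in emotions
    }


def most_likely(scores):
    """Return the emotion with the highest score."""
    return max(scores, key=scores.get)


face = {"neutral": 0.6, "worried": 0.4}   # face alone leans neutral
voice = {"neutral": 0.2, "worried": 0.8}  # voice tone suggests worry
print(most_likely(face))                      # neutral
print(most_likely(fuse_scores(face, voice)))  # worried
```

Here the face alone suggests a neutral mood, while the blended scores point to worry. How much weight each source deserves is a design decision that would need clinical validation rather than a fixed rule.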

Enhancing Your System’s Accuracy

To tackle these challenges, a few strategies can improve the accuracy of your facial emotion recognition software. One of the most practical steps is adjusting your clinical environment to remove common sources of error, such as poor lighting or awkward camera angles. Stable, consistent conditions help the software do what it’s designed to do: read expressions accurately.

Keeping the technology updated also makes a big difference. Regular updates, particularly ones that include retrained models, let the system learn from more recent data and adapt to the way people express emotion today. Expanding training sets with a wider variety of facial expressions is also important, because it helps the system understand the full range of emotions seen in real healthcare settings.

At the end of the day, software works best when paired with human insight. Medical staff can recognize when something doesn’t look quite right and make a judgment call the software might miss. Blending AI with human experience can raise the quality and reliability of emotion recognition.

Human Connection Starts with Better Recognition

Effective emotion recognition can lead to more compassionate care. It's not just about reading a face; it's about understanding a person. In healthcare, that kind of insight helps build trust and can lead to better patient outcomes.

As facial emotion recognition software improves, so does the ability to connect with people beyond the surface. Addressing its current limitations, from lighting conditions to data bias, opens the door to more reliable emotion assessment. By continuing to train AI on broader data, improving the settings where the technology is used, and blending human intuition with machine precision, healthcare professionals can respond more effectively to patient needs. It's a step toward deeper understanding and more responsive care.

If you’re looking to support stronger patient relationships through technology, Upvio’s tools can help improve interactions and outcomes by accurately identifying emotional cues. Learn how your practice can benefit from using facial emotion recognition software to better understand patient needs and enhance the care experience.